Open Sorcery AI

take my money!

So…this happened yesterday. Another day, another open source banger.

To which I say — first of all, you are five days late to my birthday, but I’ll take that. Second of all, a 32B reasoning model that rivals R1 (a 600B+ model)?!

What kind of sorcery is this?

Well, it’s the open kind.

You get it? Open Sorcery, like Open Source? I’m not proud of this pun so it’s ok if you don’t like it. But as a quick anecdote — the Chinese AI community has long referred to training LLM as 炼丹 (alchemy), so I feel justified calling the open release of LLM as open sorcery.

In this blog post, I aim to demystify another part of the sorcery, namely

How do open source AI make money?

But first, let me briefly go over the benefits of open source AI. So without further ado…

Why open source

What a crazy quarter for open source AI! We started the year with a wake up call from DeepSeek, then we had Alibaba open sourcing the Wan 2.1 video model, and finally, the Alibaba’s QwQ reasoning model release yesterday.

Not only big corporations are pouring resources into open source AI, startups are emerging quickly as well. Recently, I came across a really cool startup called Oumi (Open Universal Machine Intelligence), who just raised $10 mm and is committed in building an unconditionally open AI platform. (Note that open AI is not to be confused with OpenAI.) By unconditionally open, Oumi strives to build a platform that “offers state-of-the-art foundation models with open code, open data, open weights—and open collaboration,” as opposed to just releasing the model weights like Llama.

In preparation for this blog post, I had a chat with Oumi’s CEO about their recent launch, and one thing he said sticked with me

“Open source AI is the future, the same way Linux dominated.”

To folks like Manos, open source AI is their conviction, which explains why Oumi’s founding team left their previous roles building closed source models, like Gemini, for this new mission. However, for other folks who haven’t taken the pill, here’s the tl;dr on the benefits of open source AI

Flexibility — finetune on proprietary data, deploy anywhere, avoid vendor lock in.

Security — code, behavior and performance are constantly under scrutiny.

Innovation — openness encourages innovation and collective advancement.

In addition, open source adoption can also bootstrap go-to-market motions. In a recent podcast, Ron Gabrisko, Chief Revenue Officer of Databricks, fondly recalled that rooms were packed on roadshows because everybody wanted to meet the star creators of Apache Spark. I bet the same would happen to DeepSeek as well.

For a more extensive reading list, I recommend the following

Open Source AI is the Path Forward by Mark Zuckerberg (CEO @ Meta)

If AI isn't truly open, it will fail us by Emmanouil (Manos) Koukoumidis (CEO @ Oumi)

Keep the code behind AI open, say two Entrepreneurs by The Economists, Martin Casado (GP @ a16z) and Ion Stoica (cofounder & chairman @ Databricks)

Now you might say — well, if open source AI is truly as amazing as you say, then why is OpenAI still closed source? (even though the wind is changing?)

What is closed source AI holding onto? Expelliarmus!

Well, it’s pretty simple — they will tell you that closed source AI makes more money is safer to humanity. The argument is that bad actors could wreak havoc with AI and there isn’t a way to turn off the facet if the model is open source. This sounds more like a protectionist propaganda to me, plus, I believe there are way more good actors who will learn how to counter bad actors equipped with AI.

So besides the safety reason, what else? Well, the biggest revenue stream for closed source AI is usage fee. If OpenAI open sourced their model, two things can happen

someone will self host instead of paying OpenAI for usage

someone will start hosting OpenAI model as a service and erode its marketshare

In other words, you would be leaving money on the table, that is — if your key revenue stream is from hosting model as a service.

This naturally brings us to the next section — how do we make money with open source AI, and are there additional revenue streams we can explore to make the business case more attractive for open source AI?

Making money with open source AI

Making money as a big corporate

Big corporates like Alibaba, Meta, and Tencent have been making a lot of moves in open source AI lately, so there must be some sort of commercial interest behind it. While Alibaba makes money from hosting its models on Alibaba Cloud, it’s not immediately obvious what Meta is after. Meta doesn’t host Llama as a service and inference providers like Together AI don’t even need to pay Meta for commercial licenses because Meta only requires it “if your services exceed 700 million monthly users.”

At first sight, it seems like Meta is doing all this because open sourcing AI encourages community innovation, which will improve Llama’s performance. A superior model will then create a better user experience through product integration, which will eventually trickle down to Meta’s earning because user stickiness is money.

However, this doesn’t feel like the complete picture to me. Luckily, I was able to find an answer in an illuminating blog post titled Laws of Tech: Commoditize Your Complement. In short, the Laws of Tech suggests the following strategy — say you are a quasi-monopoly (like Meta/Instagram) and wants to leverage AI in your product. How do you make sure that you don’t give your AI vendor too much leverage? One obvious choice is to develop your own AI. However, the real genius move is to open source it, as it levels the playing field in the AI vendor space. In other words, by open sourcing Llama, Meta has fostered competition in its upstream supply chain, reducing vendors’ leverage and hence solidifying its own position in the value chain.

Making money as a startup

Figuring out how to self sustain is crucial to open source AI startups, not only from a commercial lens, but also from an independence standpoint. If open source AI companies can’t prosper on their own, then only large corporates like Meta or Alibaba can afford to open source models, which will be inevitably biased by their own priorities.

Let’s survey the top open source AI startups and see how they are making money

Mistral

usage fee from hosted model (1P and 3P like AWS Bedrock)

subscription fee for applications like Le Chat Pro

professional service like model customization

commercial license for premier models like Codestral

DeepSeek

usage fee from hosted model

Genmo (Open Source Video Model)

subscription fee for hosted model access

OUMI

professional service like model customization, training and deployment

Unsloth

paid tier for premium features like memory and speed optimization

Charging for hosted model access isn’t the greatest business strategy because that’s not a model provider’s core competency. There will be other companies, like AWS or Together AI, who have a deeper expertise as an inference provider. Moreover, users might also find those inference providers more flexible because they tend to offer many models at the same.

The better way to make money on open source projects is to build premium offerings on top of the open core, such as

Service: RedHat makes a huge chunk of its money from its enterprise support

Premium Feature: MongoDB is built on the MongoDB NoSQL database but offers advanced features like enterprise security and query optimization. It brought in $1.68 billion last year and has a market cap of $21 billion.

Certification: Linux Professional Institute offers vendor-neutral Linux certification, which in estimate brings in $17mm per year

Similarly, we may have the following revenue streams for open source AI startups

Service: help with customization, deployment, etc

Premium Features: finetuning, RAG, agents, memory or performance optimization all benefit from a stronger base model.

Certification: models still have their own quirks and mastering them is a skill that could be certified

Another interesting revenue stream is licensing open source models for commercial deployments. However, if anybody can just grab your model from Hugging Face, how do you enforce the license terms?

After all, you don’t want people to pirate your models, right?

How to enforce model license

In order to enforce model license, you must first be able to detect who’s using or modifying your model. Without this capability, it really undermines the defensibility of the open source licensing strategy.

After all, how can IP owners possibly enforce copyright if YouTube doesn’t know how to detect infringement?

There are 2 main ways to detect model infringement

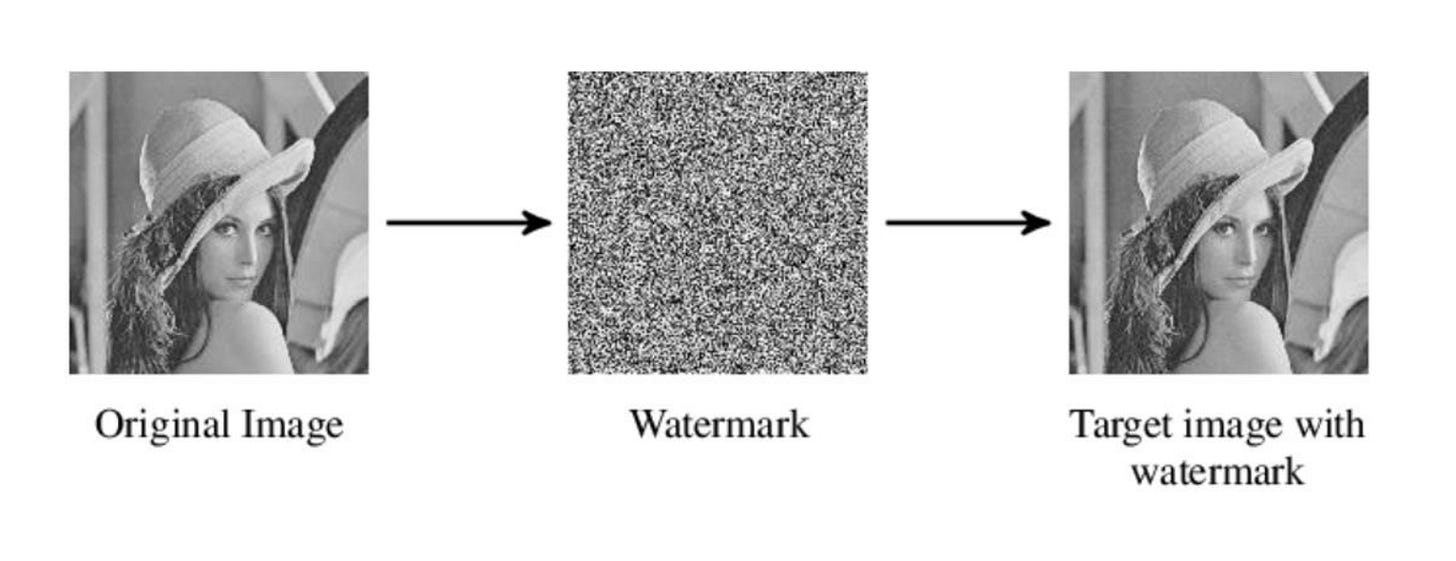

Output watermark: detect whether someone is using your model as is

Model watermark: detect whether someone finetuned/distilled your model

Output Watermark

Google’s SynthID “watermarks and identifies AI-generated content by embedding digital watermarks directly into AI-generated images, audio, text or video.” On a broad stroke, SynthID works in the following ways

For text outputs, it tweaks the probability of each token it generates without affecting the output quality, creating a statistical fingerprint that can be detected

For audiovisual outputs, it does clever signal processing tricks that adds in signatures imperceptible to human.

Other ways to water mark text output includes

Green red watermark: like how SynthID operates but it suffers from the tradeoff between detectability & quality if the probability disturbance is too large

Gumbel watermark: it does not perturb the output distribution

With output watermark, open source AI companies can routinely scan the Internet for watermarked output and take actions accordingly. However, what happens if the model pirates are not using your original model as is, but a slightly modified version through finetuning or distillation? Model watermark comes to rescue!

Model Watermark

This technique is becoming increasingly relevant as model distillation gets easier. While DeepSeek shocked the world with its $6mm training cost, another team soon distilled the DeepSeek model with less than $50 and got extremely close to DeepSeek R1 in benchmarks like MATH500. While DeepSeek might be fine with this, other AI companies like OpenAI and Anthropic both prohibit others to distill their models.

Keen readers might question why we need model watermark when we already have output watermark. Since distilled model should more or less inherit the behavior of the original model, wouldn’t the output watermark be preserved?

To some degree it’s true, but the output watermark can be easily removed by a counter technique called ensemble distillation, where averaging the outputs of multiple models can significantly reduce or even erase the watermarks, which necessitates model watermarks.

One of the key paper in this field, Protecting Language Generation Models via Invisible Watermark, introduced a model watermark mechanism called GINSEW. The basic idea of GINSEW is similar to output watermark — However, instead of injecting a generic probability perturbation, GINSEW uses a sinusoidal signal perturbation, which is difficult to erase by the averaging process during ensemble distillation.

I reached out to Xuandong Zhao, first author of GINSEW, for additional comment. Zhao mentioned that while the GINSEW results are promising, it has only been tested on smaller models (<1B parameters). As a summary, Zhao said

“Watermarking could help detect fine-tuning, but it remains an open problem that still needs to be solved.

Fine-tuning is relatively easier to detect; for instance, we can introduce backdoors or modify specific parameters in ways that remain stable when fine-tuned, enabling detection.

For distillation, detection is much more challenging. If someone distills just 10 samples, given the billions of parameters in a model, it is nearly impossible to detect. However, some approaches might work if the distilled dataset is large enough, making detection more feasible.”

Conclusion

As you can see, there isn’t a perfect solution yet. If you ask me to rank the open source commercialization strategy for startups, I’d say

licensing to 3P inference provider > service / become 1P inference provider / premium feature > certification

I know that VCs hate to hear the word “service”, but each model has its own quirk and who has a better insight on how to tame of fix those quirks than the team that built this model? This is especially true for image or video models, which is why I think the Moonvalley x Asteria partnership is so interesting.

Normally, premium features would be ranked as a very effective commercialization strategy for open source teams. Imagine if Llama were the only LLM, then building advanced feature like finetuning or RAG on top of Llama makes sense. However, given that there are so many model providers out there, the opportunity to monetize through advanced features is slim as there are plenty horizontal players.

While the commercialization strategy for open source AI evolves, I really do hope we can unite behind the open source mission and stop spending millions of dollars, energy and intellect recreating the same wheel. In the end, the real sorcery of open-source AI isn’t just in the code—it’s in the collective drive to push the boundaries of innovation together.

Somebody please kill the idealism in me, and yea, subscribe to make me feel needed.