bRO Do yOu eVEN kNOw ComfyUI?

if not, you should

OK, what the heck is ComfyUI, and what exactly makes it so comfy? Well… according to the official definition on its website, ComfyUI is:

The most powerful open source node-based application for creating images, videos, and audio with GenAI.

Fun fact: the name “comfy” came about because its creator was generating AI art with Stable Diffusion, and people said his images had a comfy feeling. I don’t know exactly what that means, but this is what comes to mind:

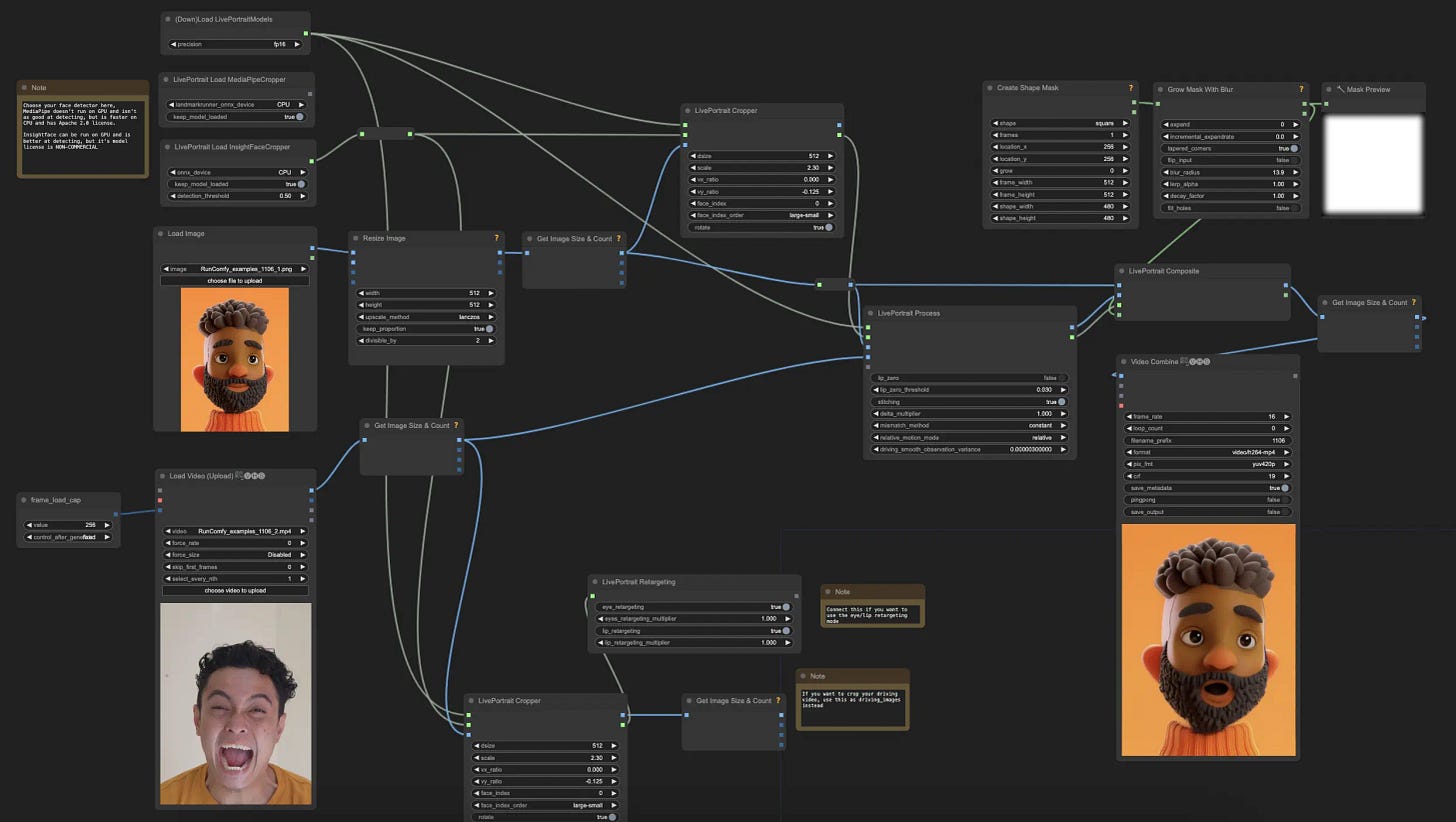

Still don’t know what it does? Fine, let’s see if this helps: here is what ComfyUI looks like for a Live Portrait workflow.

If you still don’t get it, don’t worry! You are not dumb! You most likely don’t understand ComfyUI because you don’t fully understand the problem it solves, and that’s what this blog is for.

What’s your problem?

Suppose you are an artist (and let’s just say you already have impeccable taste and outstanding vision). What stands between you and greatness? The answer is quite simple:

The boundary of your creativity is defined by your tools.

So, how does ComfyUI help unleash our creativity? Let’s start from the beginning. What is the one thing that AI content creators and AI artists do the most? It’s clicking that generate button over and over again, tweaking their way toward a clip they like. If you are using a closed-source video generation model like Runway or Pika, your bill can stack up quickly. When your money runs out, you lose access to those tools, so you turn your attention toward openly available models like Genmo’s Mochi or Tencent’s HunyuanVideo.

While these models are free, oftentimes you don’t want to run them vanilla, because then you hardly stand out from the crowd, and that is unacceptable! Artists want their own magic sauce, so they might add extra preprocessing or post-processing steps like inpainting, outpainting, noise, denoise, LoRA, upscaling, area composition, ControlNet, Live Portrait, and the list goes on. What’s more, each of these steps comes with its own set of parameters that you can tweak. The point is, you start to realize that this is really a modular process (steps you can swap in and out) and a parametric one (every step has knobs to turn).

At this point, there are two natural ways to present this modular workflow:

a linear, notebook-style layout like Google Colab, or

something like a flow chart.

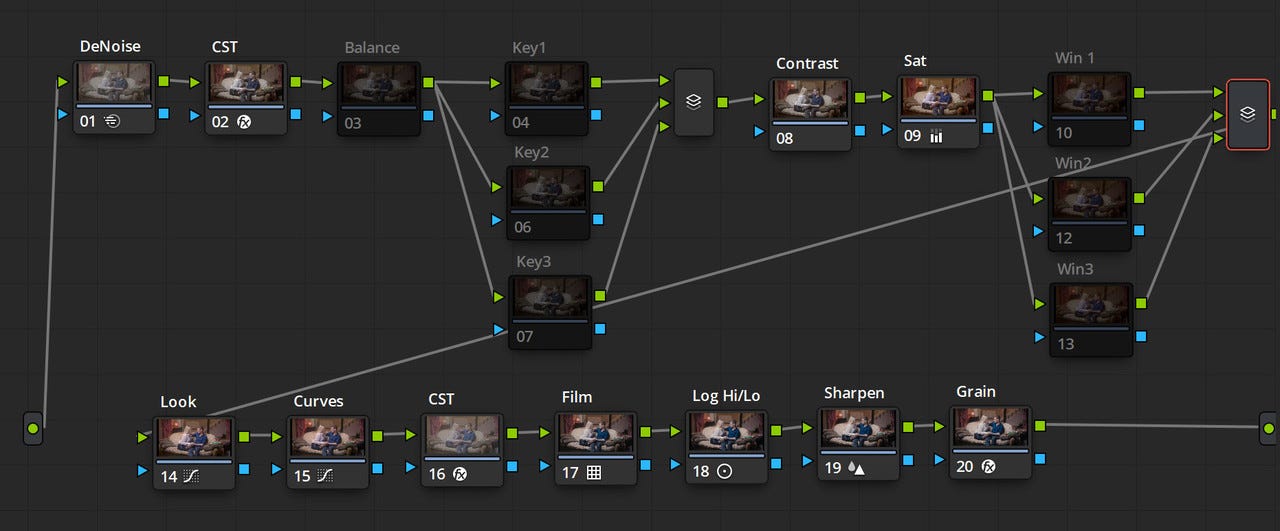

The two representations are formally equivalent (any flow chart without loops can be flattened into an ordered list of steps, and vice versa), but the ComfyUI team made the smart choice of a node-based, drag-and-drop UI, since many content creators already know node-based workflows from color grading tools like DaVinci Resolve.
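To make the “formally equivalent” point concrete, here is a minimal Python sketch, assuming a toy workflow format loosely modeled on the JSON that ComfyUI can export for its API: each node has a `class_type`, some literal parameters, and links written as `[source_node_id, output_index]`. The node types (`CheckpointLoader`, `PromptEncode`, `Sampler`, `Upscale`) and their parameters are hypothetical placeholders, not real ComfyUI nodes. A topological sort flattens the graph into the ordered, cell-by-cell view a notebook would show.

```python
from graphlib import TopologicalSorter

# Hypothetical toy workflow, loosely modeled on ComfyUI's API-format JSON.
# Node types and parameter names are placeholders, not real ComfyUI nodes.
WORKFLOW = {
    "4": {"class_type": "CheckpointLoader", "inputs": {"ckpt_name": "model.safetensors"}},
    "6": {"class_type": "PromptEncode", "inputs": {"text": "a comfy cabin", "model": ["4", 0]}},
    "3": {"class_type": "Sampler", "inputs": {"steps": 20, "cfg": 7.0, "model": ["4", 0], "prompt": ["6", 0]}},
    "8": {"class_type": "Upscale", "inputs": {"scale": 2, "image": ["3", 0]}},
}


def is_link(value):
    """A link is [source_node_id, output_index]; anything else is a literal parameter."""
    return (
        isinstance(value, list)
        and len(value) == 2
        and isinstance(value[0], str)
        and isinstance(value[1], int)
    )


def to_notebook(workflow):
    """Flatten a node graph into a linear list of notebook-style cells."""
    # Map each node to the set of nodes it reads from (its predecessors).
    deps = {
        node_id: {v[0] for v in node["inputs"].values() if is_link(v)}
        for node_id, node in workflow.items()
    }
    cells = []
    # static_order() yields every node after all of its predecessors
    # (and raises graphlib.CycleError if the graph has a loop).
    for node_id in TopologicalSorter(deps).static_order():
        node = workflow[node_id]
        args = ", ".join(
            f"{name}=out_{v[0]}[{v[1]}]" if is_link(v) else f"{name}={v!r}"
            for name, v in node["inputs"].items()
        )
        cells.append(f"out_{node_id} = {node['class_type']}({args})")
    return cells


for cell in to_notebook(WORKFLOW):
    print(cell)
```

Running it prints four cells in the only valid order for this graph: `out_4`, `out_6`, `out_3`, `out_8`. Going the other way is just as mechanical: each notebook cell becomes a node, and every variable it reads becomes an edge. Since both views carry the same information, the choice between them really comes down to which one your users find more natural.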

It’s quite mesmerizing to admire a complicated ComfyUI workflow, but ComfyUI is more than meets the eye. Remember, the ComfyUI backend is the engine that actually keeps everything running. Content creators don’t often have access to large GPU clusters, so the ComfyUI team spent a lot of time optimizing the backend (e.g., smart memory management during model loading) so that many workflows run smoothly even without an RTX 4090.

Lastly, the open-source nature of ComfyUI means you can push the boundaries as far as you like! If you don’t like something, or need something that doesn’t exist yet, go build it!

Is Comfy in a Comfy spot?

Yeah, duh. Just look at its stars!!! ComfyUI is currently on the GitHub Top 150 list, and it has 10,000+ custom nodes written by community members, which really goes to show how much ecosystem adoption it has gathered, almost organically.

But what is really the best indicator of success for an open source project? Is it the number of GitHub stars? The number of active contributors? If it were up to me, I’d say those only scratch the surface. What you can’t fake is how drunk people get at its community meetup. I, for one, was pretty drunk. Incredibly unprofessional, I know, but I was just really thirsty from all that talking. The event drew a wide spectrum of attendees: filmmakers like Paul Trillo, brand experience agencies like Appmana, AI motion graphics startups like glyf, AI film competitions like Project Odyssey, and boring BD (business development) people like me.

Here’s a recap of the interesting things from the event, to the best of my recollection, which is not very good.

Met one of my favorite YouTubers, Theoretically Media! This man probably spends 25 hours a day experimenting with AI video workflows.

Third time running into Teslanaut! Give him a follow! Here’s my favorite shot from his latest work, Daydreaming.

Sean, Head of Engineering at BentoML, demoed a really nifty tool called Comfypack, which makes it super easy to share and run ComfyUI workflows by packaging everything a workflow needs to run, such as model versions, custom nodes, and Python dependencies. In short, it’s like a docker-compose file for ComfyUI, letting artists spend more time creating and less time wrestling with dependencies.

Next Up

So what’s next for ComfyUI?

Hiring! They are hiring across the board in design, engineering and ops. This is a great team and they know how to have fun.

New functionality, such as better Mac compatibility, training support, a notebook mode, subgraphs, and easier collaboration.

That’s it for now. As always, please subscribe and make me feel needed, thanks.